The Standing Person Recognition Algorithm (SPRA) detects reflections from a standing target (a person) in a stationary environment. It is included in SkyRadar's FreeScopes ATC I.

Connected mobility, increased activity on runways, and perimeter surveillance bring new challenges for close-range radars.

Our team developed and published the "Standing Person Recognition Algorithm" and included it in our FreeScopes ATC I suite, which handles radar data in real time. Trainees, students and vocational learners can apply it with a mouse click and experiment with it.

We share the maths with all our users, enabling them to apply it in their applications. Or to improve it? Let us try. FreeScopes provides you with the tools to make it even better.

The Concept

The main idea for recognizing a standing person and estimating the correct position in different environments comes from the clutter map concept. Since a standing target is expected to still be standing after a whole radar sweep, the algorithm stores the environment information for a complete radar sweep and estimates the position of the standing person from this stored background information. On the next radar sweep, it simultaneously stores the new environment reflections and estimates the classified reflections of the motionless person.

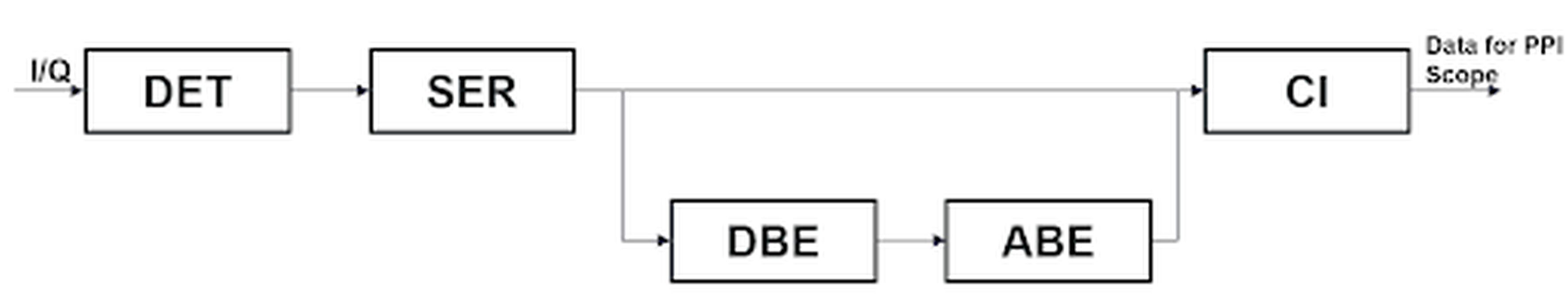

Block diagram of the Standing Person Recognition Algorithm

The estimation path of the algorithm is a function of the distance and angular position of the target, as can be seen in Figure 1:

Figure 1 SPRA block diagram

In this configuration, the SPRA consists of five sub-blocks:

- DET – Detection / Threshold

- SER – Storing Environment Reflections

- DBE – Distance Based Estimation

- ABE – Angular Based Estimation

- CI – Comparing Information

We speak of a composite block.

Understanding Each Block in the Standing Person Recognition Algorithm

In the following, we describe the operation of each sub-block from Figure 1.

Threshold

First, it is always preferable to apply threshold detection to filter and refine the data that will be used for estimation. In our training environment, we suggest using the Threshold block, which you will find in FreeScopes Basic 1.
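As a rough illustration of this step, the sketch below zeroes every sample of a range profile that falls below a fixed level. The function name `threshold_detect`, the sample values, and the threshold of 1.0 are assumptions made for this example; they are not taken from the FreeScopes Threshold block.

```python
def threshold_detect(powers, threshold):
    """Keep samples at or above the threshold and set all others to 0.0."""
    return [p if p >= threshold else 0.0 for p in powers]


# Hypothetical received power values along one radial line.
echo = [0.1, 0.4, 2.5, 3.1, 0.2, 0.05]
detected = threshold_detect(echo, threshold=1.0)
print(detected)  # [0.0, 0.0, 2.5, 3.1, 0.0, 0.0]
```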

Storing Environment Reflections

After detecting the relevant signal, SER applies a concept similar to the clutter map of the MTD (Moving Target Detection) technique. In this step, the environment reflections are stored for a whole radar sweep in one large matrix. After one whole radar sweep, the information is split into two paths:

- One which forwards the information to the estimation algorithms DBE and ABE

- And the other one, which sends information to CI
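The storing and splitting described above could be sketched as follows. This is only an illustration under stated assumptions: the class `SweepStore`, its method names, and the layout of one range profile per azimuth step are hypothetical and do not describe the actual FreeScopes implementation.

```python
class SweepStore:
    """Collects one thresholded range profile per azimuth step (assumed layout)."""

    def __init__(self, n_azimuth, n_range):
        self.n_azimuth = n_azimuth
        self.n_range = n_range
        self._rows = [None] * n_azimuth

    def add_profile(self, azimuth_index, profile):
        """Store the range profile measured at one azimuth step."""
        if len(profile) != self.n_range:
            raise ValueError("profile length must equal n_range")
        self._rows[azimuth_index] = list(profile)

    def is_complete(self):
        """True once every azimuth step of the sweep has been stored."""
        return all(row is not None for row in self._rows)

    def release(self):
        """Return two independent copies of the map and reset for the next sweep."""
        if not self.is_complete():
            raise RuntimeError("sweep not complete")
        to_estimation = [row[:] for row in self._rows]  # path to DBE and ABE
        to_comparison = [row[:] for row in self._rows]  # path to CI
        self._rows = [None] * self.n_azimuth
        return to_estimation, to_comparison


store = SweepStore(n_azimuth=4, n_range=3)
for az in range(4):
    store.add_profile(az, [0.0, float(az), 0.0])
est_map, ci_map = store.release()
```

Returning copies rather than shared references keeps the estimation path from altering the data that the comparison path later relies on.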

Distance Based Estimation

In the Distance Based Estimation – DBE, the stored reflections are used to cluster the signals returned from a person. The main idea of this method is to filter out signal returns that extend over more than 20 cm in range. Walls, parked cars, and other stationary targets may cover more than 20 cm in the longitudinal dimension, whereas a person stays within 20 cm. In this way, we localize the regions of interest on the stored environment map along the distance dimension.
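A minimal sketch of this range filter is shown below, assuming a thresholded range profile in which zero means "no detection" and a known range bin size. The function names, the 5 cm bin size, and the sample profile are assumptions for illustration; only the 20 cm limit comes from the text.

```python
def contiguous_runs(values):
    """Yield (start, stop) index pairs of consecutive non-zero samples; stop is exclusive."""
    start = None
    for i, v in enumerate(values):
        if v > 0 and start is None:
            start = i
        elif v <= 0 and start is not None:
            yield start, i
            start = None
    if start is not None:
        yield start, len(values)


def distance_based_estimation(profile, bin_size_m, max_extent_m=0.20):
    """Keep only returns whose extent along range does not exceed max_extent_m."""
    out = [0.0] * len(profile)
    for start, stop in contiguous_runs(profile):
        if (stop - start) * bin_size_m <= max_extent_m:
            out[start:stop] = profile[start:stop]
    return out


# Hypothetical profile with 5 cm range bins: a 2-bin return (10 cm, person-like)
# and a 6-bin return (30 cm, for example a wall).
profile = [0.0, 3.0, 4.0, 0.0, 0.0, 5.0, 5.0, 5.0, 5.0, 5.0, 5.0, 0.0]
kept = distance_based_estimation(profile, bin_size_m=0.05)
```

In this example only the two-bin return survives; the six-bin return is removed because it is longer than 20 cm.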

Angular Based Estimation

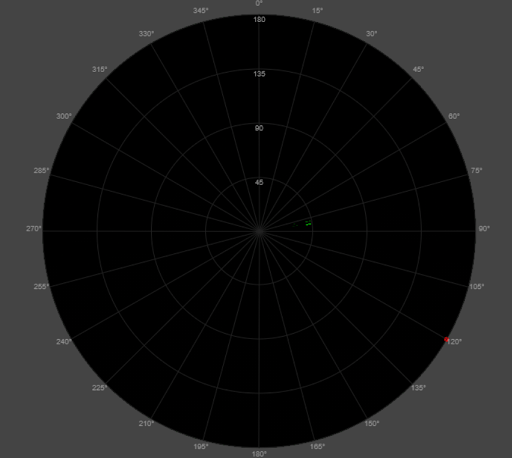

After the DBE, the pre-processed information is forwarded to the Angular Based Estimation – ABE method. In this method, the stationary targets are clustered based on their angular positions. Because the distance and angle axes are scaled and represented differently, we divide the ABE estimation area into three regions in which the angular snippet length is adjusted automatically. The snippet length depends on the possible position of the person: a person at near range may be detected in a larger snippet than a person at far range, who might cover an angle of less than a degree. These power returns cover continuous snippet positions on the angular dimension. Thus, signal returns that cover less than two degrees, in contrast to those of large stationary targets, represent the standing person, as can be seen in Figure 2:

Figure 2 PPI screenshot of different stationary targets

In this way, standing persons cover the shortest snippets on the angular dimension. These snippets represent the relevant information, which will be forwarded to CI.
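The angular filtering can be illustrated with a simplified sketch. It assumes a map indexed as `sweep[azimuth][range]`, a constant azimuth step, and a single two-degree limit; the three range-dependent regions mentioned above are not reproduced, and wrap-around at 360 degrees is ignored. All names and sample values are hypothetical.

```python
def angular_based_estimation(sweep, az_step_deg, max_span_deg=2.0):
    """Keep only returns whose continuous azimuth span is below max_span_deg."""
    n_az = len(sweep)
    n_rng = len(sweep[0]) if sweep else 0
    out = [[0.0] * n_rng for _ in range(n_az)]
    for r in range(n_rng):
        az = 0
        while az < n_az:
            if sweep[az][r] <= 0:
                az += 1
                continue
            start = az
            # Advance to the end of this continuous angular snippet.
            while az < n_az and sweep[az][r] > 0:
                az += 1
            if (az - start) * az_step_deg < max_span_deg:
                for a in range(start, az):
                    out[a][r] = sweep[a][r]
    return out


# Hypothetical map with 0.5 degree azimuth steps and two range bins.
# Range bin 0 holds a 1.0 degree snippet, range bin 1 a 3.0 degree snippet.
sweep = [
    [0.0, 7.0],
    [4.0, 7.0],
    [4.0, 7.0],
    [0.0, 7.0],
    [0.0, 7.0],
    [0.0, 7.0],
    [0.0, 0.0],
    [0.0, 0.0],
]
narrow = angular_based_estimation(sweep, az_step_deg=0.5)
```

Here the 1.0 degree snippet is kept and the 3.0 degree snippet is discarded. A range-dependent limit could replace the fixed `max_span_deg` to mimic the region-based adjustment.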

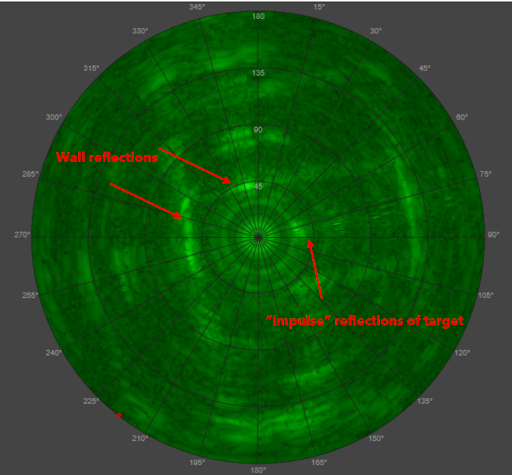

Comparing Information - the CI Method

The Comparing Information Method (CI method) is used for comparing, detecting, and estimating these tracks more clearly on the PPI scope. The main aim of the CI is to compare the peaks, or main power targets, from SER and DBE/ABE, and from there decide whether the radar detected a person or another stationary object. As can be seen in Figure 3, the output signals of the SPRA represent clear tracks of standing targets on the PPI scope in comparison with Figure 2:

Figure 3 Standing Person Tracks on the PPI Scope from SPRA
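The text does not spell out the decision rule used in CI, so the following is only one possible reading, given as a sketch: a cell that is occupied in the stored map is labelled "person" if it also survived the DBE/ABE path, and "other" if it did not. The function name, labels, and sample maps are assumptions for illustration.

```python
def compare_information(stored_map, estimated_map):
    """Label each occupied cell of the stored map as 'person' or 'other'.

    Both maps are indexed as map[azimuth][range]; a value of 0 means no return.
    """
    labels = []
    for az, (stored_row, est_row) in enumerate(zip(stored_map, estimated_map)):
        for rng, (s, e) in enumerate(zip(stored_row, est_row)):
            if s > 0:
                labels.append((az, rng, "person" if e > 0 else "other"))
    return labels


# Hypothetical maps: the stored environment and the output of the estimation path.
stored = [[0.0, 7.0], [4.0, 7.0]]
estimated = [[0.0, 0.0], [4.0, 0.0]]
result = compare_information(stored, estimated)
```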

Outlook

The Standing Person Recognition Algorithm SPRA helps to distinguish small standing targets from clutter. It supports close-range surveillance, e.g., when you want to make sure a runway is clear for a landing aircraft. SkyRadar's ATC I offers further advanced algorithms such as Moving Target Indication (MTI) with Post-Processing or Moving Target Detection. All these algorithms work in real time with SkyRadar's training radar NextGen 8 GHz Pulse.

Read more articles about the features included in the FreeScopes ATC I module.